Intelligence with Integrity: The Case for Carbon-Conscious AI Development

Illustration: Irhan Prabasukma.

Artificial intelligence was supposed to make life easier and “smarter”. But the race to train ever-bigger models has produced an invisible yet expanding environmental footprint. Each new generation of AI demands ever-larger leaps in computing power, and each leap carries a hidden cost: enormous energy use and rising carbon emissions. What began as a digital revolution of the mind is quietly becoming an industrial-scale drain on the planet.

AI and Carbon Emissions, Hand-in-Hand

Recent research has quantified this relationship with disquieting precision. A study by Tsinghua University, OpenCarbonEval: Carbon Emissions of AI Models (2024), finds that the carbon emissions of AI models rise roughly in proportion to the compute needed to train them. Across data from 56 large models, the relationship between training compute and carbon dioxide equivalent (CO₂e) emissions is very close to linear. The takeaway is simple but sobering: all else being equal, double the compute and you double the emissions.
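A common back-of-the-envelope model shows why the relationship should be linear when hardware and grid stay fixed. This model is an assumption here and is not necessarily the method OpenCarbonEval uses. In the formula, E is operational training emissions (kg CO₂e) and C is training compute (FLOP). η is hardware efficiency (FLOP per kWh of IT energy), PUE is the data center's power usage effectiveness, and I is the grid's carbon intensity (kg CO₂e per kWh).

```latex
E \approx \frac{C}{\eta} \cdot \mathrm{PUE} \cdot I
\qquad\Longrightarrow\qquad
E(2C) = \frac{2C}{\eta} \cdot \mathrm{PUE} \cdot I = 2\,E(C)
```

Compute C appears only in the numerator, so doubling it doubles E as long as η, PUE, and I are unchanged. The sketch leaves out the embodied emissions of manufacturing the hardware. The proportionality bends only when efficiency improves or the grid gets cleaner.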

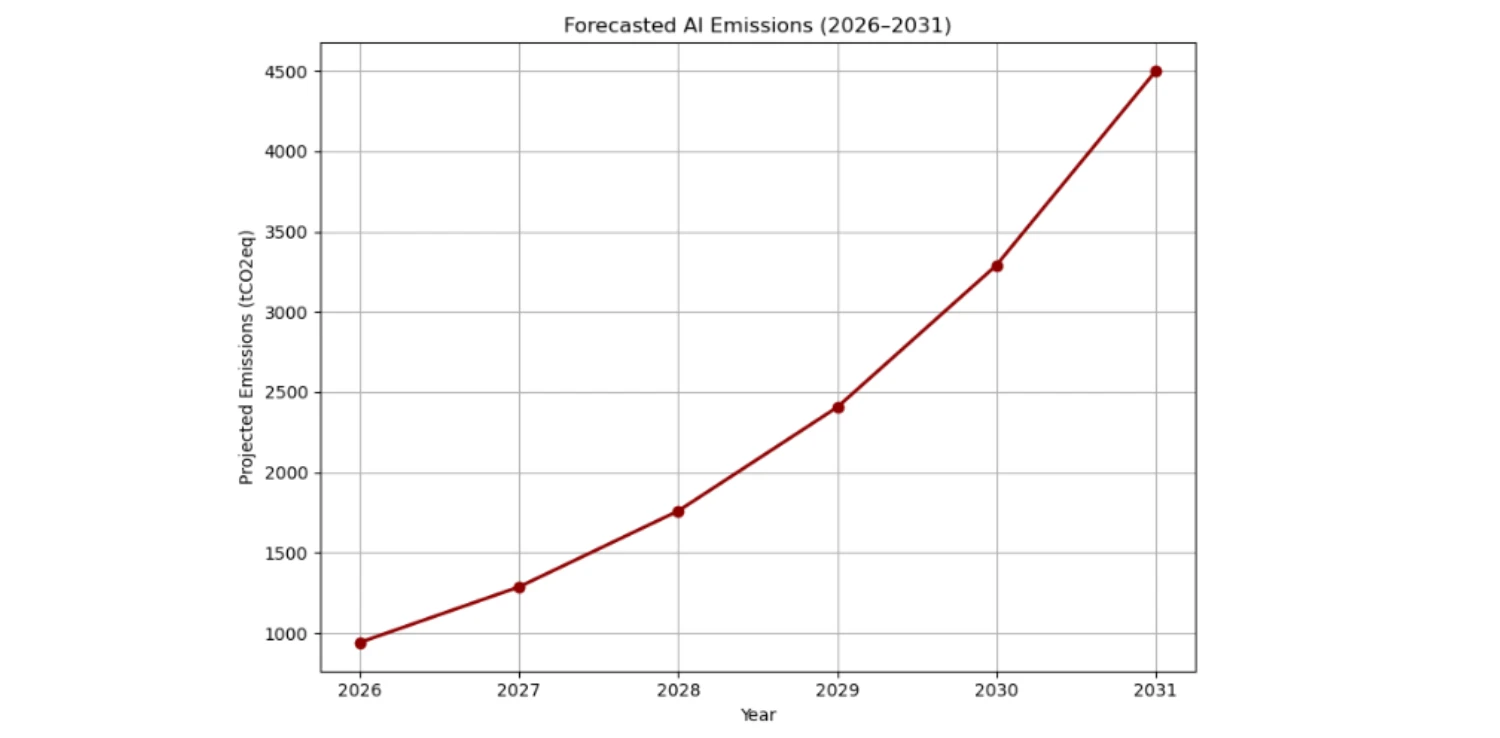

This linearity has far-reaching implications. Training a state-of-the-art model today can emit as much carbon as the lifetime output of hundreds of cars. Moreover, the trend is accelerating. If the current 40% annual growth rate in AI compute continues, AI’s emissions are projected to climb from about 1,000 to 4,500 tonnes of CO₂ equivalent by 2031. That is a more than fourfold increase, and it illustrates the danger of unchecked scaling. The world is witnessing a repeat of industrial history, except that this time the pollution comes not from smokestacks but from algorithmic ambition.
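Emissions track compute almost one-for-one, so they inherit compute's compound growth. The Python sketch below assumes a constant annual growth rate, which real trends will not follow exactly. It computes the growth multiplier over a given number of years and, inversely, how many years a given multiplier takes. The function names are illustrative.

```python
import math

def growth_multiplier(annual_rate: float, years: float) -> float:
    """Factor by which a quantity grows after `years` at a constant `annual_rate` (0.40 = 40%)."""
    return (1.0 + annual_rate) ** years

def years_to_multiply(factor: float, annual_rate: float) -> float:
    """Years needed for a quantity to grow by `factor` at a constant `annual_rate`."""
    return math.log(factor) / math.log(1.0 + annual_rate)

if __name__ == "__main__":
    rate = 0.40  # 40% annual growth in compute, the rate cited in the text
    print(round(years_to_multiply(3.0, rate), 2))   # 3.27 years to triple
    print(round(years_to_multiply(4.5, rate), 2))   # 4.47 years for a 4.5x rise
    print(round(growth_multiplier(rate, 7), 2))     # 10.54x after seven years
```

At 40% a year, emissions triple in a little over three years, rise 4.5-fold in about four and a half, and grow roughly tenfold over seven. The horizon behind any such forecast therefore depends heavily on its baseline year.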

Disproportionate Toll

What is ethically objectionable is not merely the total volume of emissions but also how intangible the process is. The pollution is hidden behind digital services, dispersed, and often outsourced to data centers in countries where electricity still comes chiefly from fossil fuels.

Beyond emissions, AI’s environmental toll reaches deep into the material and spatial economy. The data center construction boom depends on large-scale land acquisition that often “repurposes” forests and natural habitats in developing countries or in areas where costs are lower. There, energy-intensive digital infrastructure displaces local ecosystems and livelihoods. In some cases, this expansion results in serious deforestation, habitat fragmentation, and community displacement.

Additionally, the vast quantities of water used to cool these servers add to the strain on already arid regions. Meanwhile, demand for the rare-earth and other critical minerals used in GPUs and semiconductors fuels environmentally destructive mining, including in nominally protected areas and on Indigenous lands.

In this sense, the environmental footprint of artificial intelligence is paid for disproportionately. Profits and prestige flow to technology giants in the Global North, while the environmental costs tend to fall on poorer regions. AI thus echoes the extractivism and inequity of earlier industrial booms, the very patterns it once promised to transcend. This time, the inequality spans both the digital and the ecological layers of the planet.

Carbon-Compute Tax for AI

This raises an urgent ethical and policy question: should AI firms be held to environmental standards similar to those for heavy industry? Should there be a carbon or compute tax on AI?

The idea might seem extreme, but the reasoning is not new. For decades, governments have priced carbon in industries such as aviation, steel production, and manufacturing. They have done so through carbon taxes or emissions-trading schemes, to make these industries bear part of the cost of their pollution. The same logic could apply to the digital sector, whose emissions are no less real just because its products are intangible.

A carbon-compute tax would tie taxation to the amount of computation used in model training. Because compute is measurable and auditable, it offers a transparent proxy for emissions. Under such a regime, companies whose training runs exceed a set threshold (10²⁴ FLOPs, for instance) would pay a marginal surtax scaled to the estimated carbon intensity of their energy mix. This would not only discourage over-scaling but also reward algorithmic efficiency. It would encourage companies to build within sustainable limits instead of pursuing endless parameter growth.
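To make the mechanism concrete, here is a minimal Python sketch of such a marginal surtax. Only the 10²⁴ threshold comes from the text. Everything else is a hypothetical placeholder: the compute-to-energy conversion (`FLOP_PER_KWH`), the price per tonne (`RATE_USD_PER_TONNE`), and the names `TrainingRun` and `carbon_compute_surtax`. A real scheme would need measured energy data rather than a fixed conversion factor.

```python
from dataclasses import dataclass

# Hypothetical parameters for illustration only; none are proposed policy values.
THRESHOLD_FLOP = 1e24        # example threshold mentioned in the text
FLOP_PER_KWH = 1e18          # assumed effective efficiency, incl. data center overhead
RATE_USD_PER_TONNE = 50.0    # assumed surtax per tonne of CO2e

@dataclass
class TrainingRun:
    total_flop: float              # total floating-point operations used in training
    grid_kg_co2e_per_kwh: float    # estimated carbon intensity of the energy mix

def carbon_compute_surtax(run: TrainingRun) -> float:
    """Marginal surtax: only compute above the threshold is taxed, weighted by grid intensity."""
    excess_flop = max(0.0, run.total_flop - THRESHOLD_FLOP)
    energy_kwh = excess_flop / FLOP_PER_KWH
    tonnes_co2e = energy_kwh * run.grid_kg_co2e_per_kwh / 1000.0
    return tonnes_co2e * RATE_USD_PER_TONNE

if __name__ == "__main__":
    print(carbon_compute_surtax(TrainingRun(5e23, 0.4)))   # below threshold: 0.0
    print(carbon_compute_surtax(TrainingRun(3e24, 0.4)))   # roughly 40,000 USD
    print(carbon_compute_surtax(TrainingRun(3e24, 0.05)))  # cleaner grid: roughly 5,000 USD
```

Two design choices follow the text. Runs below the threshold pay nothing, so smaller research projects are untouched. The same compute also costs less on a cleaner grid, which rewards both efficiency and renewable sourcing.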

Policy, Accountability, and Transparency

Taxation alone, though, is insufficient. Under a wider governance framework, all AI labs should be required to report their training emissions as part of regular environmental, social, and governance (ESG) reporting. Much like corporate carbon reports, every large model would carry a public “carbon label”. The label would state the total compute consumed, the share of renewable energy used, and the resulting emissions. Such disclosure would democratize accountability, enabling investors, policymakers, and consumers to make informed, ethical decisions. Transparency, after all, is where responsibility begins.
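A disclosure label like this is essentially a small, fixed record. The Python sketch below shows one possible shape for it. The class name `CarbonLabel`, its field names, and the example figures are hypothetical. They were chosen only to mirror the three items the text lists: total compute, renewable share, and resulting emissions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CarbonLabel:
    """Hypothetical public 'carbon label' for a trained model (field names are illustrative)."""
    model_name: str
    total_compute_flop: float     # total training compute
    renewable_share: float        # fraction of training energy from renewables, 0 to 1
    emissions_tonnes_co2e: float  # reported training emissions

    def __post_init__(self) -> None:
        # Reject values that could not appear on an honest label.
        if not 0.0 <= self.renewable_share <= 1.0:
            raise ValueError("renewable_share must be between 0 and 1")
        if self.total_compute_flop < 0 or self.emissions_tonnes_co2e < 0:
            raise ValueError("compute and emissions must be non-negative")

    def render(self) -> str:
        """Format the label as human-readable text."""
        return (
            f"{self.model_name}\n"
            f"  Training compute: {self.total_compute_flop:.2e} FLOP\n"
            f"  Renewable energy: {self.renewable_share:.0%}\n"
            f"  Emissions: {self.emissions_tonnes_co2e:,.0f} t CO2e"
        )

if __name__ == "__main__":
    print(CarbonLabel("example-model", 3e24, 0.6, 800.0).render())
```

Making the record immutable and validating it on creation means a published label cannot be silently edited or carry impossible values, such as a renewable share above 100%.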

Beyond taxation and disclosure, there is a compelling case for environmental audits of large foundation models before deployment. Just as corporations undergo financial audits, AI developers could be required to commission third-party environmental audits. These would cover both training emissions and the ongoing energy cost of keeping models running around the clock. The European Union’s AI Act entered into force in 2024 and already imposes risk assessments on “high-impact” systems. It could readily incorporate carbon intensity as one of its defining metrics.
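The “always on” part of the footprint is easy to underestimate because it accumulates hour after hour. The rough Python sketch below assumes a constant average power draw and a single grid carbon intensity, both of which are simplifications. The function name and example inputs are hypothetical.

```python
HOURS_PER_YEAR = 8760  # non-leap year

def annual_operational_emissions_t(
    avg_it_power_kw: float,
    pue: float,
    grid_kg_per_kwh: float,
) -> float:
    """Tonnes of CO2e from running a deployment continuously for one year.

    avg_it_power_kw: average power drawn by the serving hardware (kW)
    pue: power usage effectiveness (total facility power / IT power)
    grid_kg_per_kwh: carbon intensity of the electricity supply
    """
    facility_kwh = avg_it_power_kw * pue * HOURS_PER_YEAR
    return facility_kwh * grid_kg_per_kwh / 1000.0

if __name__ == "__main__":
    # Hypothetical deployment: 1,000 kW average IT load, PUE 1.2, 0.4 kg CO2e/kWh
    print(annual_operational_emissions_t(1000, 1.2, 0.4))  # roughly 4,205 tonnes per year
```

Under these made-up inputs, the deployment emits roughly 4,200 tonnes of CO₂e for every year it stays online. An audit limited to training would miss this recurring cost entirely.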

Still, regulation will not be enough. What the world really needs is a cultural shift toward what could be described as “ethical efficiency”. Instead of building ever-larger models to demonstrate progress, “tech-bros” and firms need to pursue innovations that do more with less. Techniques such as parameter sparsity, retrieval-augmented generation, federated learning, and carbon-aware scheduling can cut energy consumption substantially with little or no loss of capability. A few progressive companies, such as DeepMind and Hugging Face, are already piloting such approaches. Broader adoption, however, will require firm policy pressure and public awareness.
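Carbon-aware scheduling is the easiest of these techniques to illustrate. One common form is temporal shifting: run a deferrable job, such as a training or batch-evaluation run, in the window when the grid is forecast to be cleanest. The Python sketch below assumes an hourly carbon-intensity forecast is already available; the numbers are made up. It also assumes the job draws constant power, so its emissions are proportional to the sum of intensities over the hours it runs. `best_start_hour` is a hypothetical helper, not part of any scheduler's API.

```python
from typing import Sequence

def best_start_hour(forecast: Sequence[float], job_hours: int) -> int:
    """Return the start index whose `job_hours`-long window has the lowest total carbon intensity."""
    if not 0 < job_hours <= len(forecast):
        raise ValueError("job_hours must be between 1 and len(forecast)")
    window = sum(forecast[:job_hours])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - job_hours + 1):
        # Slide the window one hour: add the new last hour, drop the old first hour.
        window += forecast[start + job_hours - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start

if __name__ == "__main__":
    # Made-up hourly forecast in g CO2e/kWh, e.g. a grid with a midday solar dip.
    forecast = [450, 420, 380, 300, 220, 210, 260, 400]
    print(best_start_hour(forecast, job_hours=3))  # 4
```

With this made-up forecast, starting at hour 4 gives a window total of 690, against 1,250 for starting immediately. Under the constant-power assumption, that is about 45% lower emissions for the same job.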

Toward Intelligence with Integrity

The argument for taxing and scrutinizing AI is not about limiting innovation; it is about reconciling intelligence with integrity. The industrial era learned the hard way that economic growth unchecked by environmental and social limits causes irreversible harm. The digital era needs to learn this lesson sooner and better. After all, development is meant to improve our lives and build a better future for all people and the planet.

Editor: Nazalea Kusuma

Partha Roy

Partha is an independent researcher, writer, and tutor in ethical and social studies whose work spans philosophical inquiry, editorial accuracy, and technological advancement. With an academic background in economics and foreign trade management, he has built a multidisciplinary career that includes data annotation and prompt design. Through his writing, he promotes ethical innovation and inclusive growth in the era of intelligent machines.
